Message Passing Interface (MPI)

MPI is a standardized message-passing interface for parallel computing. Actively developed for more than 25 years, it has multiple implementations and is widely used, particularly on supercomputing clusters. The MPI runtime also handles many aspects of program setup, such as launching tasks on each computing resource and selecting the communication transport (shared memory, InfiniBand, TCP, or others). This widespread availability and ease of use in a cluster environment make it the default choice for SynChrono.

In MPI terminology, each computing resource is called a rank, and in SynChrono each rank typically handles one ChSystem. This ChSystem may contain one or more vehicle agents, deformable terrain, or other actors. The MPI rank is responsible for sharing the state data from its ChSystem with all other ranks and for receiving their state data in return.

SynChrono Implementation

MPI-based synchronization in SynChrono happens each heartbeat with two MPI calls. First, all ranks communicate the length of their message with MPI_Allgather. In many scenarios ranks send messages of the same length every heartbeat (this is the case for vehicles on rigid terrain), but in others (such as with deformable terrain) message lengths cannot be known in advance, so this information must be communicated to each rank. After the MPI_Allgather, each rank executes a variable-length gather, MPI_Allgatherv, to retrieve message data from all other ranks.
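The bookkeeping between the two calls can be sketched without an MPI runtime. The block below is a single-process illustration, not SynChrono's implementation: `ComputeDisplacements` and `SimulateHeartbeatExchange` are hypothetical names, and the comments mark where MPI_Allgather and MPI_Allgatherv would be called in a real multi-rank run. It shows how the gathered lengths become the receive counts and displacements that a variable-length gather needs.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <numeric>
#include <vector>

// Hypothetical helper: counts[r] is the message length in bytes reported by
// rank r, i.e. the array every rank holds once the length exchange is done.
// The result is the exclusive prefix sum: where rank r's data starts in the
// combined receive buffer.
std::vector<int> ComputeDisplacements(const std::vector<int>& counts) {
    std::vector<int> displs(counts.size(), 0);
    for (std::size_t r = 1; r < counts.size(); ++r) {
        assert(counts[r - 1] >= 0);
        displs[r] = displs[r - 1] + counts[r - 1];
    }
    return displs;
}

// Hypothetical single-process stand-in for one heartbeat. messages[r] plays
// the role of the send buffer owned by rank r.
std::vector<std::uint8_t> SimulateHeartbeatExchange(
    const std::vector<std::vector<std::uint8_t>>& messages) {
    // Step 1: in a real run each rank would call MPI_Allgather with its own
    // length as the send value, so that every rank ends up with `counts`.
    std::vector<int> counts;
    for (const auto& m : messages) {
        counts.push_back(static_cast<int>(m.size()));
    }

    // Step 2: in a real run each rank would pass `counts` and `displs` as the
    // recvcounts and displs arguments of MPI_Allgatherv. Here the copy loop
    // reproduces the layout of the resulting receive buffer.
    const std::vector<int> displs = ComputeDisplacements(counts);
    const int total = std::accumulate(counts.begin(), counts.end(), 0);
    std::vector<std::uint8_t> gathered(static_cast<std::size_t>(total));
    for (std::size_t r = 0; r < messages.size(); ++r) {
        std::copy(messages[r].begin(), messages[r].end(), gathered.begin() + displs[r]);
    }
    return gathered;
}
```

A rank that sends nothing in a given heartbeat contributes a count of zero and shares its displacement with the next rank, which is why the lengths have to be exchanged before the data.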

Data Distribution Service (DDS)

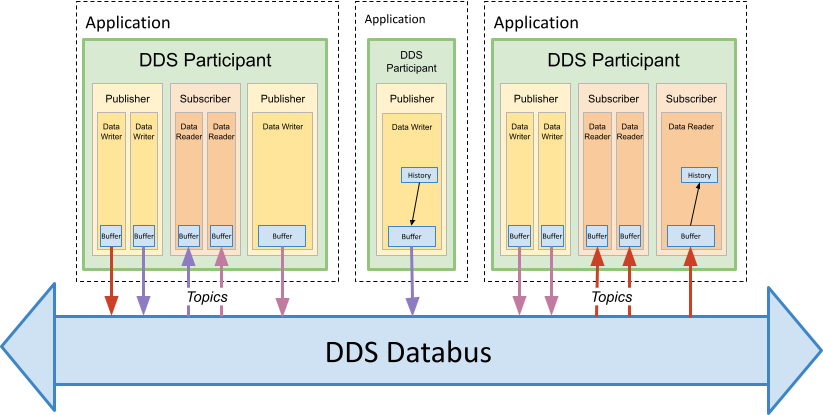

DDS is a more recent message-passing standard built around a Real-Time Publish-Subscribe (RTPS) pattern. Whereas communication in MPI happens rank-to-rank, communication in DDS happens via topics that are published and subscribed to.

There are many DDS implementations, the most popular being the one published by Real-Time Innovations (RTI). In keeping with the open-source philosophy of Project Chrono, FastDDS was selected as the vendor for the first release of this interface.

SynChrono Implementation

The figure above illustrates the general DDS communication scenario. For SynChrono, each participant corresponds to a node with a single ChSystem. Data synchronization happens via publishers and subscribers, both of which are wrapped at the SynChrono level to provide a SynChrono-specific API for passing FlatBuffer messages. There is a unique topic for each agent in the simulation, and each agent manages a publisher for sharing its data along with a subscriber for receiving data from other nodes. One level down, data readers and data writers manage the communication between each pair of agents. If there are five nodes in the simulation, each agent will have four data readers to receive data from the other nodes.
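The topic-based pattern can be illustrated with a toy, in-process sketch. Nothing here is the FastDDS or SynChrono API: `ToyTopicBus` and `RunOneHeartbeat` are hypothetical names, callbacks stand in for data readers, and the sketch assumes one agent (and so one topic) per node. It only shows that when every node subscribes to every other node's topic, a simulation with N nodes gives each node N - 1 readers.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical in-process stand-in for a DDS domain.
class ToyTopicBus {
  public:
    using Callback = std::function<void(const std::string& payload)>;

    // Attach a reader (a callback here) to a named topic.
    void Subscribe(const std::string& topic, Callback cb) {
        readers_[topic].push_back(std::move(cb));
    }

    // Deliver a payload to every reader on the topic.
    // Returns the number of readers that received it.
    std::size_t Publish(const std::string& topic, const std::string& payload) const {
        auto it = readers_.find(topic);
        if (it == readers_.end()) return 0;
        for (const auto& cb : it->second) cb(payload);
        return it->second.size();
    }

  private:
    std::map<std::string, std::vector<Callback>> readers_;
};

// Wire up num_nodes nodes with one topic each, publish once per node, and
// return how many messages each node received.
std::vector<int> RunOneHeartbeat(int num_nodes) {
    assert(num_nodes >= 0);
    ToyTopicBus bus;
    std::vector<int> received(static_cast<std::size_t>(num_nodes), 0);

    // Each node subscribes to every topic except its own.
    for (int reader = 0; reader < num_nodes; ++reader) {
        for (int writer = 0; writer < num_nodes; ++writer) {
            if (reader == writer) continue;
            bus.Subscribe("node_" + std::to_string(writer),
                          [&received, reader](const std::string&) { ++received[reader]; });
        }
    }

    // Each node publishes its state once on its own topic.
    for (int writer = 0; writer < num_nodes; ++writer) {
        bus.Publish("node_" + std::to_string(writer), "state");
    }
    return received;
}
```

With five nodes, each entry of the vector returned by `RunOneHeartbeat(5)` is four, matching the four data readers per agent described above.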

Please see here for more details about DDS.

SynChrono's use of DDS is meant to be as minimal as possible. A SynChronoManager holds a handle to a single SynCommunicator. As with MPI, a communicator (here a SynDDSCommunicator) must be created to facilitate message passing. SynChrono-level classes such as SynDDSPublisher, SynDDSSubscriber, and SynDDSTopic wrap the corresponding DDS concepts and expose a simple SynChrono API, with accessors and setters for manipulating their configuration or creating new entities.